Google Search Console 2026 guide for AI search, indexing, UX & visibility. Learn how to win organic growth in 2026 with Pansofic Solutions. Get started.

Google Search Console (GSC) is one of the most effective free tools available to website owners, developers, SEO specialists, and digital marketers. Although it has never been a strict requirement for appearing in Google Search, neglecting it in 2026 is a costly mistake.

There has been a fundamental change in search.

With AI Overviews, generative answers, entity-based indexing, and zero-click SERPs, businesses no longer compete on rankings alone. They compete on how Google's systems interpret, trust, and reuse their content. Google Search Console now sits at the center of that contest.

In 2026, GSC does much more than act as a diagnostic dashboard. It has become a search intelligence platform that exposes:

How Google's AI interprets your content.

How user experience directly affects AI exposure and rankings.

This guide walks through the current Google Search Console section by section, covers its new features and reports, and offers actionable tips businesses can use to stay competitive in an AI-led search landscape.

Google Search Console started as a technical utility, used mostly to submit sitemaps, fix crawl errors, and troubleshoot indexing issues. Over time, it became an essential SEO tool. By 2026, it has grown into something more critical: a visibility governance platform.

Mobile-first is no longer up for debate; it is the default position of the web. Google evaluates your mobile version first, and in most cases exclusively.

Search Console reflects this change and prioritizes:

Mobile usability diagnostics.

Mobile crawling and rendering problems.

Any difference between the desktop and mobile experience directly affects indexing and ranking stability. Sites that treat mobile as a secondary experience are steadily losing ground.

Core Web Vitals have reached a tipping point. In past years, high CWV scores were a competitive edge. In 2026, failing them is a competitive liability.

Google now uses UX signals to determine:

Which pages can be featured at the top of the SERP.

Which pages are left out of enhanced search features.

The Experience reports in Search Console show first-hand where your site falls below or above these thresholds.

Schema markup used to be about rich results. In 2026, it is about machine comprehension.

Structured data helps Google:

Identify entities

Reuse information in generative answers.

Search Console is a key component here because it validates schema, flags errors, and shows enhancement performance. Pages with messy structured data are trusted less by AI systems and are harder to reuse.

Search Console has been integrated with:

Google Analytics 4 (conversion)

Search Console Insights (content performance stories)

This unification lets teams follow the entire search interaction, from impression to engagement to outcome, and make SEO decisions that are better informed and more defensible.

The Performance report is the most commonly used part of Google Search Console, but in 2026 interpreting it takes more nuance than ever.

Impressions

Impressions count how often a page from your site appears in search results. In 2026, this includes:

Conventional organic listings.

Expanded SERP features

An increase in impressions without clicks usually signals wider exposure in AI features, not ranking gains.

Clicks

Clicks measure traffic acquisition, but they are no longer the whole story. AI summaries often answer a user's query without requiring a click, particularly for informational searches.

Click-Through Rate (CTR)

CTR remains an indicator of the strength of your listing, but a falling CTR does not necessarily indicate poor optimization. It can also signal:

SERP layout changes

Query intent being met before a click is made.

Average Position

Average position has become a relative measure. With dynamic SERPs, AI blocks, and mixed result types, a position of 3 does not guarantee that the result appears above the fold.

High impressions and low CTR = highly relevant, but little incentive to click.

Query-level drops = intent mismatch or content decay.

Search Console is now less a traffic meter and more a trust indicator: it shows where Google thinks your content fits in the search ecosystem.
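The interpretation rules above can be sketched in a few lines of Python. This is a minimal illustration, not a Google-defined method: the `QueryRow` type, the sample rows, and both thresholds (`min_impressions`, `low_ctr`) are assumptions chosen for the example and should be tuned to your own data.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int


def ctr(row: QueryRow) -> float:
    """Click-through rate as a fraction; 0.0 when there are no impressions."""
    return row.clicks / row.impressions if row.impressions else 0.0


def interpret(row: QueryRow, min_impressions: int = 1000, low_ctr: float = 0.01) -> str:
    # Both thresholds are illustrative assumptions, not Google guidance.
    if row.impressions >= min_impressions and ctr(row) < low_ctr:
        return "visible but rarely clicked: likely answered on the SERP"
    if row.impressions < min_impressions:
        return "low visibility: check intent match and content freshness"
    return "healthy: visible and earning clicks"


# Made-up sample rows, shaped like a Performance report export.
rows = [
    QueryRow("what is search console", clicks=12, impressions=4800),
    QueryRow("gsc api setup guide", clicks=90, impressions=1500),
]
for r in rows:
    print(f"{r.query}: CTR {ctr(r):.2%} -> {interpret(r)}")
```

Running the same classification on weekly exports makes it easier to separate "seen but answered on the SERP" queries from genuine visibility losses.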

The Indexing report shows how Google indexes your URLs, and in 2026 it is more about classification than discovery.

Indexed

Indexed pages can appear in:

Organic search results

Enhancements and rich features.

Indexation is the minimum level of visibility.

Discovered - Not Indexed

Google knows these URLs exist but has not yet crawled or indexed them. Common causes include:

Crawl budget constraints

Perceived low priority

Weak content signals

Crawled - Not Indexed

This status is critical in 2026. It means Google crawled the page and chose not to index it.

Typical reasons:

Thin or redundant content

Low perceived value compared to similar pages.

These URLs need qualitative improvement, not technical fixes.

Excluded

Pages intentionally blocked through:

noindex tags

robots.txt rules

This category is healthy when it is intentional, and catastrophic when it is accidental.
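To catch accidental exclusions before they show up in the report, the two mechanisms above can be checked locally. The sketch below uses only the Python standard library; the helper names `has_noindex` and `blocked_by_robots` and the sample inputs are hypothetical, and it only inspects the robots meta tag (not the `X-Robots-Tag` HTTP header).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    """True if the page's robots meta tag contains a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text disallows `url` for `agent`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(agent, url)


# Made-up sample inputs.
robots = "User-agent: *\nDisallow: /private/\n"
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(blocked_by_robots(robots, "https://example.com/private/report"))
print(blocked_by_robots(robots, "https://example.com/blog/post"))
print(has_noindex(page))
```

Keep in mind that a crawler can only read a noindex tag on a page it is allowed to fetch, so combining a robots.txt block with noindex on the same URL usually works against the intent.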

AI systems draw only on indexed, trusted content. Pages that do not pass Google's quality classification are practically invisible in AI-based search experiences.

Search Console is the only place where this decision-making is somewhat transparent.

User experience has become a filtering layer for ranking and AI eligibility.

Largest Contentful Paint (LCP): Perceived loading speed.

Cumulative Layout Shift (CLS): Visual stability

Interaction to Next Paint (INP): Real-world responsiveness.

Search Console does not rely on lab simulations; these metrics come from real user data, so they cannot be gamed.
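As a quick reference, the sketch below rates a metric value against Google's published Core Web Vitals thresholds, which are assessed at the 75th percentile of real user visits. The `rate` function name and the sample values are illustrative assumptions.

```python
# (good_max, needs_improvement_max) for each metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, p75: float) -> str:
    """Rate a 75th-percentile field value as good / needs improvement / poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75 <= good_max:
        return "good"
    if p75 <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Made-up sample values for one URL group.
for metric, value in {"LCP": 2.4, "INP": 350, "CLS": 0.3}.items():
    print(metric, value, rate(metric, value))
```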

Issues such as:

Touch elements placed too close together

Font readability problems

These issues directly reduce rankings and engagement.

Still mandatory. Still enforced. Still visible in GSC.

The Enhancements section of Google Search Console has quietly become one of the most strategically significant parts of the platform in 2026.

In the past, this report was used mainly to debug schema errors so that websites could earn rich snippets such as star ratings or FAQs. That is no longer the whole picture. Today, structured data shapes how Google's AI systems understand, trust, and repackage your content.

The Enhancements tab shows all the structured data types found on your site, including but not limited to:

FAQ

Video

Under each type of enhancement, Search Console indicates:

Valid items

Pages affected by each issue

Google is stricter than ever in 2026. A warning used to be harmless; now it signals that your content is not fully usable in AI-driven features.

Structured data now affects:

Eligibility for AI Overviews.

Rich result eligibility (where still applicable)

AI systems prefer content that is:

Clearly segmented

Machine-readable

Schema markup does just that.

A page without structured data can still rank, but it has a much lower chance of being:

Quoted

Highlighted

Search Console is the only place where Google tells you whether your structured data is broken or usable.
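To make "machine-readable" concrete, here is a minimal sketch that builds schema.org `FAQPage` markup as JSON-LD and runs a basic completeness check before publishing. The helper names `faq_jsonld` and `missing_fields` and the sample question are hypothetical, and the check is far less thorough than Search Console's own validation.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage document from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def missing_fields(doc):
    """Return a list of human-readable problems; empty means nothing obvious."""
    problems = []
    for i, item in enumerate(doc.get("mainEntity", [])):
        if not item.get("name"):
            problems.append(f"question {i}: empty name")
        if not item.get("acceptedAnswer", {}).get("text"):
            problems.append(f"question {i}: empty answer text")
    return problems


doc = faq_jsonld([("Is Google Search Console free?", "Yes, it is a free Google tool.")])
print(json.dumps(doc, indent=2))
print(missing_fields(doc))
```

The serialized output is what would go inside a `<script type="application/ld+json">` tag on the page.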

In 2025-2026, Google did not release flashy, headline-making changes to Search Console. Instead, it made quiet but significant improvements that reward attentive teams.

The Search Console interface is cleaner, faster, and better organized. Reports load quicker, navigation is less cluttered, and documentation links are clearer.

This matters because the faster teams can reach insights, the faster they can fix problems, particularly indexing or UX issues.

The expanded Search Console API v2 is a significant step forward for advanced teams.

It now supports:

Core Web Vitals metrics

Enhanced page and query-level reporting.

This enables:

Automated SEO reporting

Integration with internal BI tools.

For agencies and larger businesses, this means SEO at scale without manual work.
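A rough sketch of what automated reporting can look like is shown below. It assumes the request and response shape of the publicly documented Search Analytics query method (`startDate`, `endDate`, `dimensions`, `rowLimit`, and rows carrying `keys`, `clicks`, `impressions`, `ctr`, `position`); whether the API version mentioned above uses exactly these fields is not confirmed here. The helper names, the `service` object, and the sample response are assumptions for illustration.

```python
from datetime import date, timedelta


def build_query(end, days=28, dimensions=("query", "page"), row_limit=1000):
    """Build a Search Analytics request body covering `days` days ending at `end`."""
    start = end - timedelta(days=days - 1)
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": list(dimensions),
        "rowLimit": row_limit,
    }


def flatten(response, dimensions):
    """Turn API rows into flat dicts keyed by dimension and metric name."""
    records = []
    for row in response.get("rows", []):
        record = dict(zip(dimensions, row["keys"]))
        record.update(
            clicks=row["clicks"],
            impressions=row["impressions"],
            ctr=row["ctr"],
            position=row["position"],
        )
        records.append(record)
    return records


def fetch(service, site_url, body):
    # Assumption: `service` is a google-api-python-client resource, e.g. built
    # with googleapiclient.discovery.build("searchconsole", "v1", credentials=...).
    return service.searchanalytics().query(siteUrl=site_url, body=body).execute()


body = build_query(date(2026, 1, 28))
# Made-up response used to show the flattening step without a network call.
sample = {"rows": [{"keys": ["gsc guide", "https://example.com/gsc"],
                    "clicks": 5, "impressions": 200, "ctr": 0.025, "position": 4.2}]}
print(body)
print(flatten(sample, ("query", "page")))
```

The flat records can be written to a warehouse table or a sheet that feeds a dashboard, which keeps the reporting logic in one place.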

For global and multilingual sites, Search Console now provides better insight into:

hreflang implementation

Indexing issues by locale

Poorly configured international targeting can silently destroy performance across entire regions. These updates make such problems easier to catch and fix.
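One common hreflang problem is a missing return link: page A lists page B as an alternate, but B does not list A back, in which case the annotations may be ignored. The sketch below checks for that in a hand-built mapping. The `missing_return_links` helper and the sample URLs are hypothetical, and in practice the mapping would come from a crawl of your own pages.

```python
def missing_return_links(hreflang_map):
    """Find alternates that do not link back.

    hreflang_map: {page_url: {lang_code: alternate_url}}
    Returns a list of (page, lang, alternate) tuples where `alternate`
    does not list `page` among its own hreflang targets.
    """
    problems = []
    for page, alternates in hreflang_map.items():
        for lang, alternate in alternates.items():
            if alternate == page:
                continue  # self-reference, nothing to reciprocate
            back_links = hreflang_map.get(alternate, {})
            if page not in back_links.values():
                problems.append((page, lang, alternate))
    return problems


# Made-up sample: the German page forgets to point back to the English one.
site = {
    "https://example.com/en/": {"en": "https://example.com/en/", "de": "https://example.com/de/"},
    "https://example.com/de/": {"de": "https://example.com/de/"},
}
print(missing_return_links(site))
```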

Manual actions and security issues are now described in more detail, with clearer remedies.

Instead of general warnings, site owners receive:

Specific descriptions of problems.

Clear recovery guidance

This transparency minimizes downtime and ranking damage when problems occur.

Search Console Insights is now an integral part of the main GSC dashboard and turns raw data into readable performance stories.

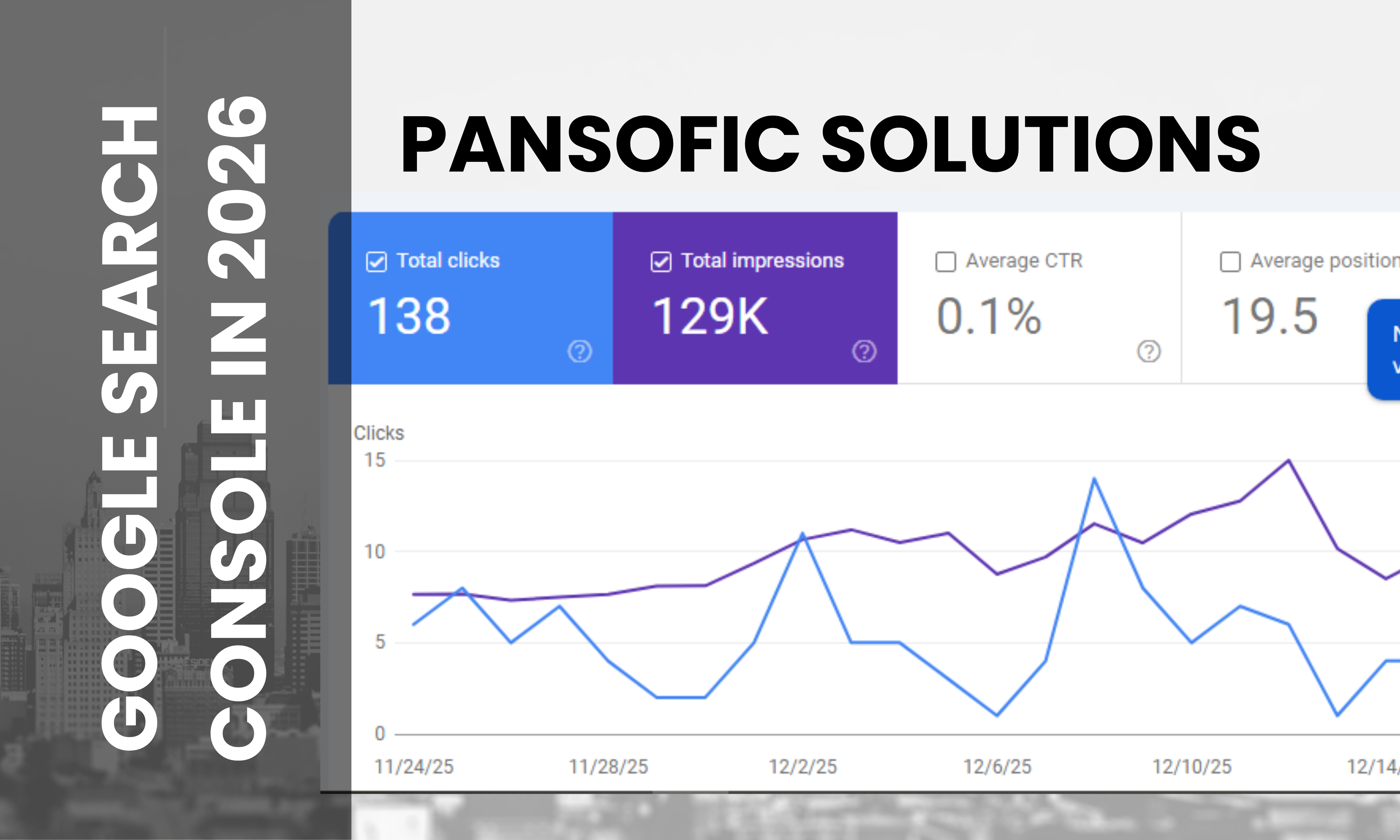

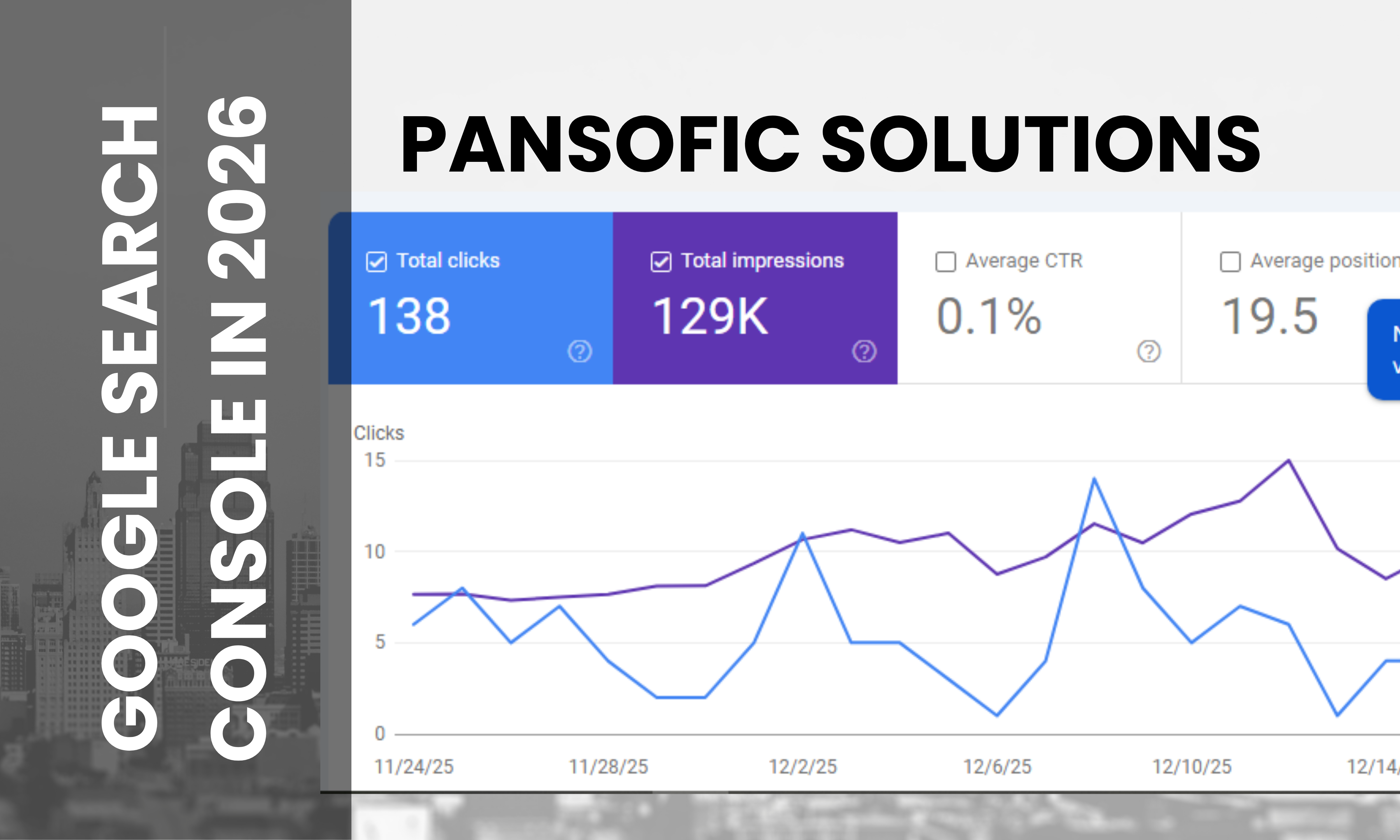

The latest Insights statistics for pansofic.com show:

Clicks: 138 clicks in the past 28 days

Trending down: legacy service-provider content.

At first glance, impressions growing faster than clicks may look alarming. In fact, it is textbook search behavior in the AI era.

Key takeaways:

More impressions indicate stronger topical relevance and broader query matching.

Declining pages are a sign of aging content, not of penalties or technical problems.

Search Console Insights helps you find out:

Which topics should be expanded.

Where authority is gradually being established.

This turns content guesswork into planning.

Search Console provides value only through intentional use. In 2026, it should be a business's strategic monitoring system rather than a monthly check-up.

Connecting GSC and GA4 creates a full-funnel view:

Search query - page impression.

Engagement - conversion

This connection is necessary for:

Proving SEO ROI

Optimizing based on actual user behavior.

Without the GA4 integration, you see only half the picture.
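If you export both data sets, the full-funnel view amounts to a join on the landing page. The sketch below is a minimal illustration that assumes made-up field names (`page`, `clicks`, `landing_page`, `conversions`) and normalizes full URLs to paths so the two sources line up. Real exports may use different column names and need more careful URL normalization.

```python
from urllib.parse import urlparse


def to_path(url_or_path: str) -> str:
    """Reduce a full URL to its path so it can be matched against a path-only field."""
    path = urlparse(url_or_path).path or "/"
    return path.rstrip("/") or "/"


def join_by_page(gsc_rows, ga4_rows):
    """Attach conversions and a click-to-conversion rate to each GSC page row."""
    conversions = {to_path(r["landing_page"]): r["conversions"] for r in ga4_rows}
    joined = []
    for row in gsc_rows:
        conv = conversions.get(to_path(row["page"]), 0)
        clicks = row["clicks"]
        joined.append({
            **row,
            "conversions": conv,
            "conversion_rate": conv / clicks if clicks else 0.0,
        })
    return joined


# Made-up sample exports.
gsc = [{"page": "https://example.com/pricing/", "clicks": 40, "impressions": 900}]
ga4 = [{"landing_page": "/pricing", "conversions": 4}]
print(join_by_page(gsc, ga4))
```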

SEO reporting done manually does not scale.

By using:

Search Console API v2

Looker Studio dashboards

Businesses can:

Track performance patterns automatically.

Share insights across teams

Automation turns Search Console into an early warning system.

Search Console identifies two important opportunities:

Content gaps

Queries your site appears for but does not answer.

Keywords with impressions but poor engagement.

Content decay

Pages losing impressions or clicks over time.

Rankings slipping because of outdated information.

In 2026, updating existing content often outperforms creating new pages.
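Content decay can be flagged by comparing clicks per page across two equal periods. The following sketch is one simple way to do that; the `decaying_pages` helper, the sample numbers, and the thresholds (`min_clicks`, `drop`) are illustrative assumptions rather than recommended values.

```python
def decaying_pages(previous, current, min_clicks=20, drop=0.3):
    """Flag pages whose clicks fell by at least `drop` (as a fraction).

    previous, current: {page: clicks} for two equal-length periods.
    Pages with fewer than `min_clicks` in the earlier period are skipped
    because small numbers fluctuate too much to be meaningful.
    Returns (page, before, after, relative_change) tuples, worst first.
    """
    flagged = []
    for page, before in previous.items():
        after = current.get(page, 0)
        if before >= min_clicks and after <= before * (1 - drop):
            flagged.append((page, before, after, (after - before) / before))
    return sorted(flagged, key=lambda item: item[3])


# Made-up sample periods.
last_quarter = {"/guide-a": 100, "/guide-b": 50, "/guide-c": 5}
this_quarter = {"/guide-a": 60, "/guide-b": 48, "/guide-c": 0}
for page, before, after, change in decaying_pages(last_quarter, this_quarter):
    print(f"{page}: {before} -> {after} ({change:.0%})")
```

Pages on the resulting list are candidates for a content refresh before anyone considers writing a new page on the same topic.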

Search behavior isn't static.

GSC allows businesses to:

Identify seasonal spikes

Align campaigns with demand curves.

This is especially useful in SaaS, B2B services, and trend-driven industries.

Even veteran teams make the same mistakes.

These pages were not rejected by accident. They failed a quality or relevance test. The fix is better content, not technical adjustments.

Soft 404s often indicate:

Thin pages

Poor intent alignment

They should be rewritten, merged, or redirected.

Drops in CTR often reflect SERP layout changes or AI answers, not ranking losses. Always check impressions and position alongside CTR.

Invalid structured data excludes pages from enhancements and AI features. These errors directly block visibility.

The longer these problems go unresolved, the harder recovery becomes. They require immediate action.

Search Console is clearly heading toward predictive and advisory features.

Likely developments include:

AI-based optimization recommendations.

Tighter integration with Ads and GA4.

The direction is clear: less reactive SEO, more proactive visibility management.

Let's call it what it is.

Google Search Console is no longer optional in 2026, and it is certainly not merely an SEO tool. It is your control tower for organic presence in an AI-driven search landscape.

It tells you:

How Google interprets your content.

How AI systems interact with your site.

If your business relies on organic discovery and you are not actively using Search Console, you are flying blind while your competitors operate with data.

No sugar-coating. No excuses.

Need help analyzing, repairing, or scaling your Search Console strategy for 2026?

Contact Pansofic Solutions and turn insight into action.

Google Search Console is a free Google tool that lets website owners track how their site appears in search results. It is vital in 2026 for understanding AI visibility, fixing indexing errors, optimizing user experience, and maintaining a competitive search presence.

GSC shows which queries generate impressions, how structured data is performing, and which pages are gaining visibility, all important inputs for improving content for AI answers.

Impressions indicate relevance and trust. In AI-heavy SERPs, visibility matters more than raw traffic.

Structured data helps AI systems extract facts, interpret entities, and reuse content in concise answers.

Yes. GSC lets you track how optimization for featured answers and AI summaries is performing by following trends in rich results, schema performance, and CTR.

Yes. Connecting GSC to GA4 enables analysis from query through to conversion.

They serve as a qualification layer. Pages with poor CWV struggle to rank or to be featured in AI features.

Absolutely. It shows performance, indexing status, and engagement for AI-written pages.

Yes. It offers hreflang diagnostics and region performance.

Ignoring non-indexed pages, misreading CTR drops, leaving schema errors unfixed, and delaying fixes for security issues.